Tuning Tips for Caching in the RadiantOne Federated Identity Service

This chapter provides guidelines on how to use caching effectively for optimal performance. The first part covers the different categories and levels of cache, along with a quick review of the use cases that justify a cache deployment. The second part describes the advantages and trade-offs of “in-memory” and “persistent” cache. Finally, the chapter reviews cache refresh methods, an essential and often overlooked aspect of cache management.

Persistent caching is only associated with the RadiantOne Federated Identity module and is irrelevant for RadiantOne Universal Directory.

When and why do you need a cache with RadiantOne FID?

There are many cases where RadiantOne is leveraged more for its flexibility than for its pure speed. However, in most critical operations such as identification, authentication, and authorization, it needs to provide guaranteed fast access to information. In fact, in many cases RadiantOne needs to provide read operations that are faster than what can be delivered by the underlying sources. Here, “fast” means a level of performance that is at least 3 to 5 times what can be derived from, for example, standard relational databases (RDBMS).

An additional requirement, in some situations, is a fast “write” capability. A typical case is when security information needs to be logged into the directory for audit purposes at authentication time. The problem is that most directories are a lot slower than RDBMS when it comes to write operations. In this case, RadiantOne can forward the writes to faster transactional data stores.

The question then becomes: How can the RadiantOne FID service be faster than the underlying data sources despite the fact that it adds an extra layer of software and one more intermediate TCP/IP hop?

The answer is unambiguous: without caching, the RadiantOne FID service is always slower than the sources it has virtualized. In terms of overhead, the additional TCP/IP hop (an application talks to RadiantOne first, and RadiantOne then talks to the underlying sources) by itself reduces the throughput of the virtualized source by approximately half. If you add to this overhead the dynamic transformations, the joins, and the reorganization of the underlying namespaces (in short, all the value added by RadiantOne through on-the-fly processing), it is difficult to imagine how this service can be faster than the underlying sources with dynamic access alone.

For all the above reasons, a flexible and efficient cache strategy and cache refresh mechanism are an absolute necessity. In most sizeable identity and access management deployments, caching is not only required; depending on volume, scalability, resilience to failure, and so on, different levels and types of cache (in-memory and/or persistent) are also needed.

To better understand the different aspects of performance and cache for RadiantOne, we need to look at the architecture. At a high level, the architecture can be divided into two main layers:

- A front-end layer which handles the protocol (LDAP or other standard protocols such as Web Services, SPML, or SAML).

- A back-end layer that oversees mapping/transforming the result set from the “virtualized” data sources (directories, databases and/or applications).

The performance of the RadiantOne service depends on a front-end layer that shares most of the logic of an LDAP server and as such can leverage the same optimization strategies. However, performance depends even more on the back-end layer, which really represents the virtualization. This is where the secret for performance and scalability resides, and where a solid and scalable caching mechanism is indispensable.

Front-end performance

The RadiantOne front-end shares most of the layers of a “classic” LDAP directory and the same “potential” bottlenecks. They are essentially at:

- The TCP/IP server (and client) connections

- The first level of query parsing

TCP/IP Connections and Connection Pooling

The first bottleneck is common to any TCP/IP-based server and not specific to LDAP servers. Even if a server could set aside resources (memory and handles) for an arbitrarily large number of connections, what the server can really support (the effective number of concurrent connections) is dictated by the underlying hardware platform, bandwidth, and operating system. Once this level is reached, no matter how powerful the underlying hardware is (in terms of processing throughput), the server sits idle, waiting for connections to be established. In this case, the only possible optimizations at the TCP layer are specific hardware such as TCP offload engines, more bandwidth, better routers, and/or scaling out by load balancing.

The latency of a TCP/IP client connection is another point to consider in terms of performance. Compared to the speed of the processor, this latency is extremely high. As a result, multiple connections and disconnections hamper the apparent throughput of any directory server. An easy way to verify this is to run the “search rate” utility (as described in Testing RadiantOne Performance) against any LDAP directory, with and without keeping the connection open after each search. With multiple connections/disconnections, the search rate of a server drops to a quarter or a third of the normal throughput. At the same time, the CPU of the server shows a lot of idle cycles, because most of the time is spent waiting for the establishment or re-establishment of the client TCP/IP connection. Since the RadiantOne service must connect to many distributed sources, it acts as a client to many TCP/IP servers, and so the cumulative latencies can be quite high. The solution to this problem is to pool the connections by class of connected servers so that existing connections are reused as much as possible. It is essentially a form of cache for the already open structures needed for a connection. Therefore, support for connection pooling is an important feature for RadiantOne in dynamic access mode (without caching any data at the level of the server). For details on connection pooling, please see Tuning Tips for Specific Types of Backend Data Sources.
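The pooling idea can be illustrated with a minimal Python sketch. It is not RadiantOne's implementation: the `ConnectionPool` class, the `connect` factory, and the backend URL are hypothetical names used only to show how keeping idle connections per class of backend avoids paying the connection latency on every request.

```python
import queue
from collections import defaultdict


class ConnectionPool:
    """Keeps idle connections per backend so they can be reused."""

    def __init__(self, connect, max_idle=4):
        self._connect = connect      # hypothetical factory: backend key -> connection
        self._max_idle = max_idle    # idle connections kept per backend
        self._idle = defaultdict(queue.LifoQueue)
        self.opened = 0              # number of real connections established

    def acquire(self, backend):
        try:
            # Reuse an already open connection when one is idle.
            return self._idle[backend].get_nowait()
        except queue.Empty:
            # Otherwise pay the connection latency once.
            self.opened += 1
            return self._connect(backend)

    def release(self, backend, conn):
        idle = self._idle[backend]
        if idle.qsize() < self._max_idle:
            idle.put_nowait(conn)
        # A real pool would close the surplus connection here.


# Stand-in factory: a real one would open an LDAP or JDBC connection.
pool = ConnectionPool(connect=lambda backend: object())
for _ in range(100):
    conn = pool.acquire("ldap://backend-a:389")
    pool.release("ldap://backend-a:389", conn)
print(pool.opened)  # 1: one connection served all 100 requests
```

Without the pool, the same loop would establish 100 connections, which is the situation the search rate comparison above measures.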

First Level of Query Parsing

The second issue is the overhead generated by the parsing of incoming queries. Although a lot less expensive than TCP/IP overhead, the parsing time is not negligible. To optimize, RadiantOne couples a query cache with an entry cache. The idea is that by caching a frequently issued query and its result set (entry cache), significant server time can be saved. This strategy works well when information is not too volatile. The cache is equipped with an LRU (least recently used) eviction policy and a TTL (time-to-live) marker for both queries and entries.
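As an illustration of how an LRU eviction policy and a TTL marker combine, here is a minimal Python sketch. The class name `LruTtlCache` and its parameters are assumptions made for this example and do not describe RadiantOne internals; the TTL clock starts when an item is stored and is not reset by reads.

```python
import time
from collections import OrderedDict


class LruTtlCache:
    """Bounded cache: evicts the least recently used item and expires old ones."""

    def __init__(self, max_items, ttl_seconds, clock=time.monotonic):
        self._max_items = max_items
        self._ttl = ttl_seconds
        self._clock = clock
        self._items = OrderedDict()  # key -> (stored_at, value), oldest use first

    def get(self, key):
        item = self._items.get(key)
        if item is None:
            return None
        stored_at, value = item
        if self._clock() - stored_at >= self._ttl:
            del self._items[key]         # TTL reached: drop the item
            return None
        self._items.move_to_end(key)     # mark as most recently used
        return value

    def put(self, key, value):
        self._items[key] = (self._clock(), value)
        self._items.move_to_end(key)
        while len(self._items) > self._max_items:
            self._items.popitem(last=False)  # evict least recently used
```

A caller that gets `None` back would query the underlying source and then call `put`, so the cache refills on demand.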

However, query cache and entry cache are not a panacea for performance issues. Query cache is relatively blind and based essentially on syntax and not semantics: two queries yielding equivalent results but using a slightly different syntax are represented twice in the cache. Another limitation is the size of these caches. As volume increases, many factors start to negate the value of the approach. The cache refresh strategy is more complex, and latency in case of failure and cold restart (the cache needs to be re-populated before providing its performance boost) can be stumbling blocks. For these reasons, these categories of caches in RadiantOne are used essentially as a performance enhancer rather than the base for server speed. If correctly sized, such a cache brings a 15 to 30% performance boost to a server (unless the whole dataset is quite small and could fit entirely in memory). The next section explains that the key to scalability and sustainable performance for a directory with significant volume rests upon its indexing strategy for entries and access paths.

Back-end Performance

The “Secret” of LDAP Directory Speed

The beginning of this chapter noted that the speed of a directory is primarily a matter of reads. So, how fast is fast? Between 1500 and 2000 queries per second per GHz per processor (Pentium IV class machine on Windows Server or Linux) for LDAP (Sun or Netscape 4.x to 5.x), versus 150 to 300 queries per second for an RDBMS (Oracle, DB2, or SQL Server), for a standard entry search with fully indexed queries. The size of an entry for these performance numbers is about 512 bytes, and the number of entries in the LDAP directory was 2 million. These numbers reflect a search operation for identification purposes (login). This performance is quite stable, even if the volume goes up to 80 to 100 million entries. However, the size of an entry is a factor in terms of performance. At 2 KB or more per entry, the search rate starts to drop quickly.

The performance secret of an LDAP server when it comes to read and search operations is a relatively simple structure for queries and operations, which yields a much simpler access method and storage strategy (nothing to be compared with the richness and capabilities of SQL, but also none of the optimizations and complexity required of a full RDBMS). Essentially, an LDAP directory can be implemented very efficiently with a classic approach of fully indexed data files based on B+ trees (RDBMS uses the same kind of storage and access methods, however their access plans are a lot more complex, involve a lot of processing, many indexes and optimizations for full support of relational operations).

Due to their relative simplicity, LDAP directories fully benefit from the classic advantages of B+ tree indexing. Searching for an entry based on a fully indexed attribute can be delivered in a guaranteed time and scales quite well. Even without page caching, a B+ tree index can retrieve an indexed attribute (in the worst case) in no more than log N disk accesses, N being the number of entries. This simple and robust structure explains the performance and stability of LDAP directories when it comes to reads, even with a significant number of entries (100 million entries or more). More importantly, this level of speed can be guaranteed even with a relatively modest amount of main memory, again one of the strong points of B+ trees. However, the story in terms of writes is not as good. Writing is an expensive operation, which does not scale well when the volume of entries increases. If you add to this constraint the fact that an LDAP directory must maintain many indexes (ideally as many as there are potentially “searchable” attributes), you can see that the number of updates is the key factor that limits the scalability of a classic directory. As is well documented, a directory is optimized for reads with a modest number of writes.
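To make the logarithmic bound concrete, the short Python sketch below counts how many index levels (worst-case page reads) a B+ tree needs for a given number of entries. The fanout of 100 keys per page is an assumption chosen for illustration; real page sizes and key sizes vary.

```python
def btree_levels(entries, fanout):
    """Number of levels a B+ tree with the given fanout needs to address `entries` keys."""
    levels, capacity = 1, fanout
    while capacity < entries:
        capacity *= fanout   # each extra level multiplies the reachable keys
        levels += 1
    return levels


# Hypothetical fanout of 100 keys per index page.
print(btree_levels(2_000_000, 100))    # 4
print(btree_levels(100_000_000, 100))  # 4
```

Under this assumption, growing from 2 million to 100 million entries does not add a level, which is consistent with the stable read performance described above.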

The Different Caching Strategies

Where are the bottlenecks for the RadiantOne service? As described in this chapter, when it comes to the front-end layer read/search speed, RadiantOne is comparable to a classic directory. The optimizations at this level are also similar. The target is essentially to optimize the TCP/IP connections and to re-use the previous queries and corresponding result sets (query cache and entry cache, see previous sections) when and where possible.

The major bottleneck is at the level of the back-end

By definition, a federated identity layer does not own any specialized back-end like a classic LDAP server (which as we have seen is the secret to speed and scalability with modest memory requirements). By construction, a federated identity layer needs access to the underlying “virtualized” data sources. Without a caching strategy, a federated identity layer acts simply as a proxy and forwards the calls to the underlying sources. Without caching, even with the best optimization at the front-end, RadiantOne FID can only deliver a fraction of the speed of the underlying sources. If the “virtualized” sources are fast in terms of read operations and if the virtualization overhead is acceptable then dynamic access alone to the source data may be a viable strategy.

However, in most cases (e.g. when databases and/or Web Services are involved and volume is significant), a back-end caching mechanism is a requirement. A complete federated identity layer needs to offer different levels of cache with different cache refresh implementation strategies matching different use cases.

There are essentially two forms of cache:

- In-memory cache: In this approach, cached entries are stored solely in memory. In terms of implementation, this approach has the advantage of simplicity. However, in practice, this solution may present many potential issues depending on the use case. In most cases, memory cache works when the volume of entries and the complexity of the queries are modest. However, with sizeable volume (the flexibility of a federated identity service often yields many use cases, which quickly increases the demand for memory) and with the variable latencies and volatility (update rates) of the virtualized data sources, it is difficult to guarantee the performance of a memory cache solution. The greatest risks with a memory cache arise when the query pattern is not predictable and the data set volume exceeds the size of memory. Furthermore, some categories of directory views are not good candidates for caching because the operation can never guarantee that all possible observable results are retrieved at the right time. If the volatility of the underlying data store is high, and the volume of data is significant, then a memory cache with a time-to-live refresh strategy alone is very difficult to put in place: it either does not guarantee an accurate “image” or generates excessive refresh volume, negating the advantages of the cache. As a result, providing guaranteed performance is difficult if not impossible. Moreover, as volume increases or when the queries needed to build the virtual image are more complex, the latency incurred by accessing the underlying sources just to rebuild the memory cache after a cold restart becomes more and more problematic. As a consequence, memory cache provides performance improvements only in very specific cases. Memory cache needs to be considered a partial/incremental improvement rather than a complete solution for guaranteed performance.

- Disk-based cache, also called “persistent cache”: In this approach, images of the virtual entries are stored in the local RadiantOne Universal Directory. This approach allows for fast recovery in case of failure. The whole virtual tree could be cached this way and a large volume of entries can be supported (hundreds of millions of entries, with essentially no practical limit if combined with partitioning and clusters). The challenge then becomes how to access this disk cache selectively at the level of each entry and at the same speed as the fastest classic LDAP server. The answer is pretty straightforward even if its implementation is not: build a persistent cache, which is a full LDAP V3 server. All the advantages described for the classic LDAP directory speed apply here. The persistent cache becomes the equivalent of what the RDBMS world would call a “materialized” view of a complex directory tree, stored in an LDAP format and transparently refreshed by polling or pushing events (triggers or logs) at the level of the data sources. In fact, the complexity here resides essentially in the cache refresh mechanism. The good news is that by leveraging good abstraction and representation (data modeling and metadata) of the different data sources, a completely automated solution is possible. One can say that in this case, a bit paradoxically, good abstraction and virtualization result in an always “synchronized” and persistent directory view. The major difference from classic synchronization, though, resides in the simplicity and the ease of deployment. Virtualization and good data modeling yield an automated solution where transformation, reconciliation, joins, caching, and synchronization are totally transparent to the RadiantOne administrator and reduced to a fairly simple configuration.

Each of these caching mechanisms can be refreshed using different methods.

Cache refresh strategies can be divided into two main categories:

- Polling the changes, either periodically or based on the expiration of a “time-to-live” value assigned to a cache entry

- Detecting the change events directly at the sources (triggers or other methods)

Cache Overview

RadiantOne offers different caching options to accommodate a variety of deployment needs.

- Memory Cache (Entry Cache and Query Cache)

- Persistent Cache

The diagram below provides a general “rule of thumb” as to what type of cache to implement.

* Low volatility during the life of the cache (the time to live).

** Repetitive Queries – a query having exactly the same syntax (same user, same filter, same ACL).

*** Low Volume – The size of the cache as measured by (Nb entries * entry size * 2.5) cannot exceed the amount of memory allocated for cache.

For persistent cache, there is no limitation in terms of number of entries since everything is stored on disk. When fully indexed, the persistent cache provides performance levels comparable to the fastest “classic” LDAP directory.

Memory Cache

A memory cache (requires Expert Mode) can be configured for any virtual directory view and there are two different types of memory caching available: Entry Memory Cache and Query Memory Cache. They can be used together or individually.

If you plan on using both entry and query cache on the same view/branch, be aware that the query cache is searched first.

Configuring Entry Memory Cache

This model of caching leverages two types of memory: Main and Virtual. Main memory is the real memory where a certain number of most recently used entries reside. Virtual memory is memory on disk where all entries that exceed the amount allowed in the main memory reside. The swapping of entries from Virtual to Main memory (and vice versa) is managed by RadiantOne.

First, enable the Entry Memory Cache. In the Main Control Panel > Settings Tab > Server Front End section > Memory Cache sub-section (requires Expert Mode), on the right side, check the Entry Cache box. Click Save in the top right corner.

If you plan on caching (either entry memory cache or persistent cache) the branch in the tree that maps to an LDAP backend, you must list the operational attributes you want to be in the cache as “always requested”. Otherwise, the entry stored in cache would not have these attributes and clients accessing these entries may need them. For details on how to define attributes as “always requested” please see the RadiantOne System Administration Guide.

Entry cache is for caching every entry (a unique DN) of the specified tree. This kind of cache works well on trees where the volatility (update rate) is low (the likelihood of this data changing during the lifetime of this cache is low). This type of cache is optimized for and should only be used for finding specific entries (e.g. finding user entries during the “identification” phase of authentication) based on unique attributes that have been indexed in the cache setting, and base searches. The attributes you choose to index for the cache are very important because the value needs to be unique across all entries in the cache. For example, if you index the uid attribute, then all entries in the cache must have a unique uid (and be able to be retrieved from the cache based on this value). On the other hand, an attribute like postalcode would not be a good attribute to index (and search for entries based on) because more than one entry could have the same value for postalcode.

The DN attribute is indexed by default. DNs are unique for each entry, which is the reason why base searches can be optimized with the entry cache.

For example, to populate/pre-fill the entry cache with unique user entries, you can preload with a query like:

ldapsearch -h localhost -p 2389 -D "uid=myuser,ou=people,dc=vds" -w secret -b "ou=people,dc=vds" -s sub "(uid=*)"

With this type of LDAP search, all entries (containing uid) are stored in the entry memory cache. Therefore, if a client then searched for:

ldapsearch -h localhost -p 2389 -D "uid=myuser,ou=people,dc=vds" -w secret -b "ou=people,dc=vds" -s sub "(uid=lcallahan)"

The entry could be retrieved from the entry cache and the underlying source would not need to be accessed.

Also, since all DNs in an LDAP tree are unique, base searches can benefit from entry cache. Continuing with the example above, if a client performed a base search on uid=lcallahan,ou=people,dc=vds, the entry could be retrieved from the entry cache.

Entry Memory Cache works for BASE searches on entries as well as on One Level and Sub Tree searches. However, for One Level and Sub Tree searches, whether the entry is returned from cache depends on whether the filter is "qualified" or not. Qualified means that the attribute in the filter is one that is indexed in your cache. Remember, only UNIQUE attributes can be indexed in your cache. You could index something like cn, which is fine if it is unique across all your entries. You cannot however index something like objectclass as more than one entry could be of the same objectclass.

For example, if your entry cache settings indexed the cn attribute, a search like the following (using the ldapsearch command line utility) doesn’t qualify to return the entry from entry cache even though it may be in the cache:

ldapsearch -h localhost -p 2389 -D "cn=directory manager" -w secret -b "cn=Laura Callahan,ou=Active Directory,dc=demo" -s sub "(objectclass=*)"

However, both of the following searches WOULD return the entry from the memory cache (because one uses a subtree search requesting a filter based on the indexed attribute, and one is a base search):

ldapsearch -h localhost -p 2389 -D "cn=directory manager" -w secret -b "cn=Laura Callahan,ou=Active Directory,dc=demo" -s sub "(cn=Laura Callahan)"

ldapsearch -h localhost -p 2389 -D "cn=directory manager" -w secret -b "cn=Laura Callahan,ou=Active Directory,dc=demo" -s base "(objectclass=*)"
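The rule illustrated by the three searches above can be sketched as a small Python check. This is a simplified model, not the product's logic: the function name `qualifies_for_entry_cache` is hypothetical, and the sketch assumes that only a single equality filter on an indexed attribute counts as "qualified". Whether the entry is actually present in the cache is a separate question.

```python
import re

# Matches a single equality filter such as (cn=Laura Callahan); no wildcards, no nesting.
SIMPLE_EQUALITY = re.compile(r"^\((?P<attr>[A-Za-z][\w-]*)=(?P<value>[^*()]+)\)$")


def qualifies_for_entry_cache(scope, ldap_filter, indexed_attrs):
    """Return True if the search could be answered from the entry cache."""
    if scope == "base":
        return True  # the DN is always indexed, so base searches qualify
    match = SIMPLE_EQUALITY.match(ldap_filter)
    if match is None:
        return False  # presence or compound filters are not handled in this sketch
    indexed = {name.lower() for name in indexed_attrs}
    return match.group("attr").lower() in indexed


indexed = ["cn"]
print(qualifies_for_entry_cache("sub", "(objectclass=*)", indexed))      # False
print(qualifies_for_entry_cache("sub", "(cn=Laura Callahan)", indexed))  # True
print(qualifies_for_entry_cache("base", "(objectclass=*)", indexed))     # True
```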

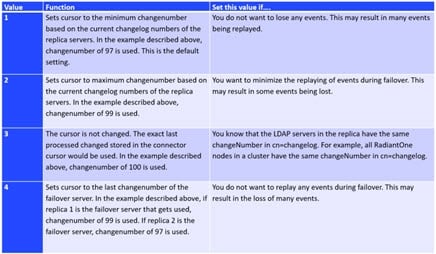

To configure an entry memory cache, follow the steps below (requires Expert Mode).

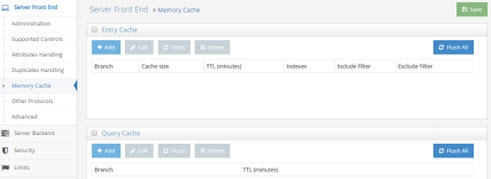

- On the Main Control Panel > Settings Tab > Server Front End section > Memory Cache sub-section, on the right side click on the Add button in the Entry Cache section.

- Select a starting point location in the virtual tree. All entries queried below this point are cached. The maximum number of entries allowed in the main memory is specified in the Number of Cache Entries parameter.

- Enter values for the Number of Cache Entries, Time to Live, Indexed attributes, and include/exclude filters. Details about these settings can be found below.

Figure 2.2: Entry Cache Settings

Time to Live

The amount of time that entries should remain in cache. After the time has been reached, the entry is removed from the cache. The next request for the entry is sent to the underlying data store(s). The result of the request is then stored in the memory cache again. This value is specified in minutes. The default value for this parameter is 60 (1 hour).

Indexes

Enter the attribute names in the cache that should be indexed. The values need to be separated with a comma. The attribute names must represent unique values for all entries across the entire cache. You must only index attributes that have unique values, otherwise the response from the cache can be unpredictable. For example, if you indexed the postalCode attribute, your first request with a filter of (postalCode=94947) may return 50 entries (because the query would be issued to and returned from the underlying source). However, your second request would only return 1 entry (because RadiantOne expects to find only one unique entry in the cache that matches a postalCode=94947, and this is typically the last entry that was added to the cache). If this functionality does not meet your needs, you should review the query cache and persistent cache options.
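The postalCode behavior described above can be modeled with a tiny Python sketch. The `UniqueValueIndex` class is a hypothetical stand-in, not the actual cache structure; it only assumes, as the text states, that each indexed value maps to a single entry and that the last entry added wins.

```python
class UniqueValueIndex:
    """Maps one indexed attribute value to exactly one cached DN."""

    def __init__(self):
        self._dn_by_value = {}

    def add(self, value, dn):
        # A second entry with the same value replaces the first one.
        self._dn_by_value[value] = dn

    def lookup(self, value):
        dn = self._dn_by_value.get(value)
        return [] if dn is None else [dn]


users = ("alice", "bob", "carol")  # hypothetical entries sharing one postal code

postal_index = UniqueValueIndex()
uid_index = UniqueValueIndex()
for uid in users:
    dn = f"uid={uid},ou=people,dc=vds"
    postal_index.add("94947", dn)  # non-unique value: entries overwrite each other
    uid_index.add(uid, dn)         # unique value: every entry stays reachable

print(postal_index.lookup("94947"))  # ['uid=carol,ou=people,dc=vds']
print(uid_index.lookup("alice"))     # ['uid=alice,ou=people,dc=vds']
```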

Include Filter

Enter a valid LDAP filter here that defines the entries that should be included in the cache. Only entries that match this filter are cached.

As an alternative approach, you can indicate what entries to exclude by using the Exclude filter described below.

Exclude Filter

Enter a valid LDAP filter here that defines the entries that should be excluded from the cache. All entries that match this filter are not cached.

As an alternative approach, you can indicate what entries to include by using the Include filter described above.

Number of Cached Entries

The total number of entries kept in main memory. The entry cache can expand beyond the main memory and the entries are swapped as needed. The default value for this parameter is 5000. This means that up to 5000 most recently used entries are put in the main memory cache. As the number of entries exceeds 5000, they are stored as virtual memory (memory on disk) and swapped as needed. The default value of 5000 is usually sufficient. However, if you would like to increase this number, you must make sure you have enough memory available on your machine and allocated to the RadiantOne Java Virtual Machine (see below for memory size requirements and check the RadiantOne Hardware Sizing Guide for global recommendations).

Cache Memory Size Requirements

For Entries

As a rule of thumb, you should take the average size of one of your entries and multiply by the number of entries you want to store in main memory. Then multiply this total number (the size for all entries) by 2.5. This gives you the amount of main memory you should allocate to store the entries.
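The rule of thumb translates directly into a one-line calculation. In the Python sketch below, the function name is hypothetical and the 1 KB average entry size is an assumed example value; the 5000 entries correspond to the default Number of Cache Entries.

```python
def entry_cache_memory_bytes(avg_entry_bytes, entries_in_main_memory, overhead_factor=2.5):
    """Main memory to allocate for cached entries, per the rule of thumb."""
    return int(avg_entry_bytes * entries_in_main_memory * overhead_factor)


# Assumed example: 5000 entries of about 1 KB (1024 bytes) each.
needed = entry_cache_memory_bytes(1024, 5000)
print(f"{needed / 1e6:.1f} MB")  # 12.8 MB
```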

For Indexes

This value is the total number of pages for each indexed attribute. The default size is 1000 pages, which means there are, at most, 1000 index pages for each attribute you have indexed.

For each indexed attribute, the amount of memory consumed per page is calculated by taking the average size of an indexed value x 3 x 64.

You should keep in mind that dn is always indexed (although it doesn’t appear in the index list). Therefore, the dn attribute by itself consumes the following (assuming the dn is an average of 200 bytes in size): 200 x 3 x 64 = 38,400 bytes (approximately 39 KB per index page)

The default of 1000 index pages consumes about 39 MB (1000 x 39 KB) in memory for the dn attribute.

Now, calculate the amount for each attribute you have indexed and add it to the 39 MB.

For example, if you index the attribute uid, and the average uid is 20 characters, you would have 20 x 3 x 64 = 3840 bytes (approximately 4 KB per index page).

With 1000 index pages (1000 x 4 KB), about 4 MB in memory is consumed for the uid attribute.

If you have 10 attributes indexed (each averaging 20 characters), the total consumption of memory would be about 40 MB + 39 MB (for the dn attribute), for a total of 79 MB.
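The index calculation above can be reproduced with a short Python sketch. The function name is hypothetical; the formula (average value size x 3 x 64 per page, 1000 pages by default) comes from the text. Note that the exact products are slightly lower than the figures in the text, which round each page size up before multiplying.

```python
def index_memory_bytes(avg_value_bytes, pages=1000):
    """Memory consumed by one indexed attribute: value size x 3 x 64 per page."""
    return avg_value_bytes * 3 * 64 * pages


dn_bytes = index_memory_bytes(200)   # dn is always indexed
uid_bytes = index_memory_bytes(20)   # one 20-character attribute
total_bytes = dn_bytes + 10 * uid_bytes

print(dn_bytes)     # 38400000 (rounded up to 39 MB in the text)
print(uid_bytes)    # 3840000 (rounded up to 4 MB in the text)
print(total_bytes)  # 76800000 (79 MB in the text after rounding up)
```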

Total Memory Size Requirements

Add entry memory cache requirements and index memory cache requirements together to get the total memory size required for your cache.

Pay attention to the maximum Java virtual memory size for RadiantOne to ensure that you are allocating enough memory. Otherwise, out of memory errors can result. Please see the section on increasing the JVM size for RadiantOne for more details.

Configuring Query Cache

Query cache is sensitive to syntax. To benefit from the query cache, a request must be the exact same query (from the same user, subject to the same ACI, and asking for the same information). This type of caching is good for repetitive queries (of the same nature).

Query cache is only applicable on naming contexts that are not configured as persistent cache.

First, enable the Query Memory Cache (requires Expert Mode).

- On the Main Control Panel > Settings Tab > Front End section > Memory Cache sub-section, on the right side, check the box in the Query Cache section.

- Click Add in the Query Cache section.

- Select a starting point location in the RadiantOne namespace. All queries below this point are cached.

- Enter a Time to Live (in minutes). This is the amount of time that entries should remain in cache. After the time has been reached, the entry is removed from the cache. The next request for the entry is sent to the underlying data store(s). The result of the request is then stored in the memory cache again. The default is 60 (1 hour).

- Click OK.

- Click Save (located in the top right hand corner) to save your settings.

The user and ACI information are also part of the query. This is why it was mentioned above that the query cache is sensitive to syntax. If User A issues a query, and then User B issues a query asking for the exact same information, this counts as two queries in the Query Cache.
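The sensitivity to user and syntax can be illustrated with a Python sketch in which the cache key is the literal query. The `QueryKey` fields and the `lookup_or_store` helper are assumptions for illustration; the actual key composition used by the query cache is not documented here.

```python
from typing import NamedTuple, Tuple


class QueryKey(NamedTuple):
    bind_dn: str                 # the user issuing the query
    base_dn: str
    scope: str
    ldap_filter: str             # compared as text, not by meaning
    attributes: Tuple[str, ...]


query_cache = {}
backend_calls = []


def lookup_or_store(key, run_query):
    """Return the cached result for this exact key, querying the backend on a miss."""
    if key not in query_cache:
        query_cache[key] = run_query()
    return query_cache[key]


def run_query():
    backend_calls.append(1)  # stand-in for a call to the underlying source
    return ["uid=lcallahan,ou=people,dc=vds"]


user_a = QueryKey("uid=userA,ou=people,dc=vds", "ou=people,dc=vds", "sub",
                  "(&(uid=lcallahan)(objectclass=person))", ("cn", "mail"))
user_b = user_a._replace(bind_dn="uid=userB,ou=people,dc=vds")
reordered = user_a._replace(ldap_filter="(&(objectclass=person)(uid=lcallahan))")

for key in (user_a, user_a, user_b, reordered):
    lookup_or_store(key, run_query)

print(len(backend_calls))  # 3: only the repeated query from User A was a cache hit
```

The reordered filter returns the same entries but is stored as a separate query, which is the "syntax, not semantics" limitation of a query cache.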

Populating Entry Cache

The entry memory cache is filled as the RadiantOne service receives queries. The first time the server receives a request for an entry, the underlying data store(s) is queried and the entry is returned. The entry is stored in the entry memory cache. The entry remains in cache for the time specified in the Time to Live setting.

Populating Query Memory Cache

The query memory cache is filled as the RadiantOne service receives queries. The first time the server receives a request, the query is added to the query memory cache, and the underlying data store(s) is queried to retrieve the entries. The entries resulting from the query are also stored in the cache.

Refreshing the Memory Cache

A time-to-live parameter can be set for both the entry cache and the query cache. The time starts when the entry/query is added into memory. Once the time-to-live value is reached, the entry/query is removed from the cache. The next time a query is received for the entry, RadiantOne issues a query to the underlying store(s), retrieves the latest value and the entry is stored in the entry memory cache and/or the query memory cache again.

You also have the option to flush the entire memory cache from the Main Control Panel > Settings Tab > Server Front End section > Memory Cache sub-section (requires Expert Mode). On the right side, click on the “Flush All” button next to the type of cache you are interested in clearing.

Figure 2.3: Memory Cache Settings

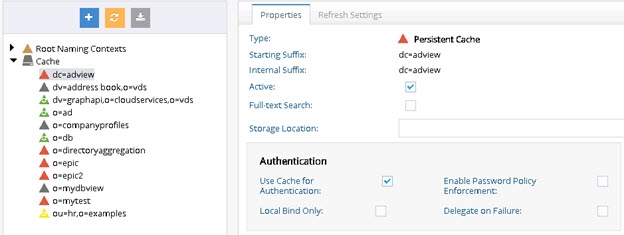

Persistent Cache

Persistent cache is the cache image stored on disk. With persistent cache, the RadiantOne service can offer a guaranteed level of performance because the underlying data source(s) do not need to be queried, and once the server starts, the cache is ready without having to be “primed” with an initial set of queries. Also, you do not need to worry about how quickly the underlying data source can respond. What is unique about the persistent cache is that if the RadiantOne service receives an update for information that is stored in the cache, the underlying data source(s) receives the update, and the persistent cache is refreshed automatically. In addition, you have the option of configuring real-time cache refreshes, which automatically update the persistent cache image when data changes directly on the backend sources. For more details, please see Refreshing the Persistent Cache.

If you plan on caching (either entry memory cache or persistent cache) the branch in the tree that maps to an LDAP backend, you must list the operational attributes you want to be in the cache as “always requested”. Otherwise, the entry stored in cache would not have these attributes and clients accessing these entries may need them.

Disk Space Requirements

Initialization of a persistent cache happens in two phases. The first phase is to create an LDIF formatted file of the cache contents (if you already have an LDIF file, you have the option to use this existing file as opposed to generating a new one). The second phase is to initialize the cache with the LDIF file. After the first phase, RadiantOne prepares the LDIF file to initialize the cache. Therefore, you need to consider at least these two LDIF files and the amount of disk space to store the entries in cache.

Best practice is to allow four times the size of the generated LDIF file as the disk space required to initialize the persistent cache. For example, lab tests have shown that 50 million entries (1 KB or less in size) generate an LDIF file of approximately 50 GB, so the total disk space recommended to create the persistent cache in this example is 200 GB. See the RadiantOne Hardware Sizing Guide for general recommendations on disk space.
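The sizing rule above is simple arithmetic, sketched here in Python for convenience. The function name and the `factor` parameter are illustrative assumptions; only the four-times multiplier and the 50 GB example come from the text.

```python
def estimate_pcache_disk_gb(ldif_size_gb: float, factor: float = 4.0) -> float:
    """Return the disk space (in GB) suggested for initializing a persistent cache.

    The default factor of 4 is the best-practice multiplier quoted in this guide.
    """
    if ldif_size_gb < 0:
        raise ValueError("LDIF size must be non-negative")
    return ldif_size_gb * factor


# Lab-test example from the text: 50 million entries produce an LDIF of about 50 GB.
print(estimate_pcache_disk_gb(50))  # 200.0
```

Treat the result as a starting point and confirm it against the RadiantOne Hardware Sizing Guide.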

Data Statistics

To get statistics about the entries in your view, you can use the LDIFStatistics function of the <RLI_HOME>/bin/advanced/ldif-utils utility. Once you have an LDIF file containing your entries, pass the file name and path to the utility.

The results include the following statistics about entries (non-group), groups and objectclasses:

###### Entries statistics ######

Entry count – number of entries

Max attributes per entry

###### Non-group entry statistics ######

AVG attributes per entry

Max entry size in bytes

AVG entry size in bytes

Max attribute size

AVG attributes size (non-objectclass)

###### Groups statistics ######

Group count – number of group entries

Groups Statistics: [

### Groups SIZE_RANGE_NAME statistics ###

Group entry count

Max members

AVG members

Max entry size in bytes

AVG entry size in bytes

###### ObjectClass Statistics ######

### objectclass_name statistics ###

Entry count – number of entries

Max attributes per entry

AVG attributes per entry

Max entry size in bytes

AVG entry size in bytes

Max attribute size

AVG attributes size

RDN Types: [rdn_name]

Entry count per branch: {branch_dn=entrycount_x},

The following would be an example of the command and statistics returned.

C:\radiantone\vds\bin\advanced>ldif-utils LDIFStatistics -f "C:\radiantone\vds\vds_server\ldif\export\mydirectory.ldif"

###### Entries statistics ######

Entry count: 10014

Max attributes per entry: 19

###### Non-group entry statistics ######

AVG attributes per entry: 8

Max entry size: 956 bytes

AVG entry size: 813 bytes

Max attribute size: 1

AVG attributes size (non-objectclass): 1

###### Groups statistics ######

Group count: 2

Groups Statistics: [

### Groups LESS_THAN_10 statistics ###

-Group entry count: 1

-Max members: 2

-AVG members: 2

-Max entry size: 578 bytes

-AVG entry size: 578 bytes,

### Groups BETWEEN_1K_AND_10K statistics ###

-Group entry count: 1

-Max members: 10000

-AVG members: 10000

-Max entry size: 571 KB

-AVG entry size: 571 KB]

###### ObjectClass Statistics ######

### organization statistics ###

-Entry count: 1

-Max attributes per entry: 8

-AVG attributes per entry: 8

-Max entry size: 403 bytes

-AVG entry size: 403 bytes

-Max attribute size: 1

-AVG attributes size: 1

-RDN Types: [o]

-Entry count per branch: {root=1},

### groupofuniquenames statistics ###

-Entry count: 1

-Max attributes per entry: 9

-AVG attributes per entry: 9

-Max entry size: 578 bytes

-AVG entry size: 578 bytes

-Max attribute size: 2

-AVG attributes size: 2

-RDN Types: [cn]

-Entry count per branch: {ou=groups,o=companydirectory=1},

### organizationalunit statistics ###

-Entry count: 11

-Max attributes per entry: 8

-AVG attributes per entry: 8

-Max entry size: 468 bytes

-AVG entry size: 439 bytes

-Max attribute size: 1

-AVG attributes size: 1

-RDN Types: [ou]

-Entry count per branch: {o=companydirectory=11},

### inetorgperson statistics ###

-Entry count: 10000

-Max attributes per entry: 19

-AVG attributes per entry: 19

-Max entry size: 956 bytes

-AVG entry size: 814 bytes

-Max attribute size: 1

-AVG attributes size: 1

-RDN Types: [uid]

-Entry count per branch: {ou=inventory,o=companydirectory=1000, ou=management,o=companydirectory=1000, ou=human resources,o=companydirectory=1000, ou=product development,o=companydirectory=1000, ou=accounting,o=companydirectory=1000, ou=information technology,o=companydirectory=1000, ou=customer service,o=companydirectory=1000, ou=sales,o=companydirectory=1000, ou=quality assurance,o=companydirectory=1000, ou=administration,o=companydirectory=1000},

### groupofurls statistics ###

-Entry count: 1

-Max attributes per entry: 9

-AVG attributes per entry: 9

-Max entry size: 571 KB

-AVG entry size: 571 KB

-Max attribute size: 10000

-AVG attributes size: 10000

-RDN Types: [cn]

-Entry count per branch: {ou=groups,o=companydirectory=1}]

Done in 1169ms

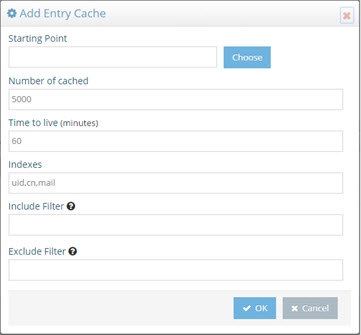

Initializing Persistent Cache

Initialize persistent cache during off-peak hours or scheduled downtime. Initialization is a CPU-intensive process, and while it runs, queries are delegated to the backend data sources, which might not be able to handle the load.

When initializing persistent cache, two settings you should take into consideration are paging and initializing cache from an encrypted file. These options are described in this section.

If you are using real-time refresh, make sure the cache refresh components are stopped before re-initializing or re-indexing a persistent cache.

Using Parallel Processing Engine

In some cases, the virtual engine parallel processor (vpp) can be used to speed up the cache image creation while indexing. This option can only be used when initializing persistent cache with the init-pcache command of the vdsconfig utility. It is useful when the virtual view to be cached contains many entries and other time-intensive configurations such as joins and computed attributes involving lookups. This option isn’t compatible with views associated with interception scripts. Initializing a view that is incompatible with the -vpp option results in an error that indicates why the view is incompatible. See the RadiantOne Command Line Configuration Guide for details on the init-pcache command.

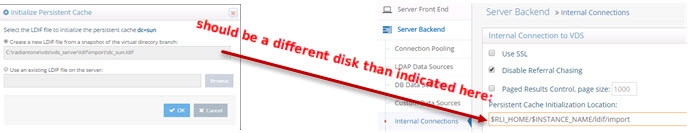

Using Paging

Depending on the complexity of the virtual view, building the persistent cache image can take some time. Since the internal connections used by RadiantOne to build the persistent cache image are subject to the Idle Connection Timeout server setting, the cache initialization process might fail due to the connection being automatically closed by the server. To avoid cache initialization problems, it is recommended to use paging for internal connections. To use paging:

- Navigate to the Main Control Panel > Settings tab > Server Front End > Supported Controls.

- On the right, check the option to Enable Paged Results.

- Click Save.

- Navigate to the Main Control Panel > Settings tab > Server Backend > Internal Connections (requires Expert Mode).

- On the right, check the option for Paged Results Control, page size: 1000.

- Click Save.

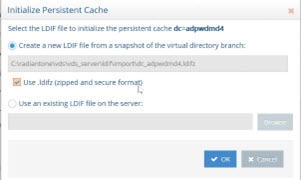

Supporting Zipped and Encrypted LDIF Files

If you are initializing persistent cache using an existing LDIFZ file, the security key used in RadiantOne (for attribute encryption) where the file was exported must be the same security key value used on the RadiantOne server that you are trying to import the file into.

If you are creating a new LDIF file to initialize the persistent cache, you have the option to use an LDIFZ file, which is a zipped and encrypted file format. This ensures that the data to be cached is not stored in clear text in the files required for the initialization process.

To use this option, you must have an LDIFZ encryption key configured. The security key is defined from the Main Control Panel > Settings Tab > Security > Attribute Encryption section.

Once the security key has been defined, check the option to “Use .ldifz (zipped and secure format)”.

Figure 2.4: Using LDIFZ File to Initialize Persistent Cache

Options for Refreshing the Persistent Cache

There are four categories of events that can invoke a persistent cache refresh. They are:

- When changes occur through RadiantOne.

- When changes occur outside of RadiantOne (directly on the backend source).

- Scheduling a periodic refresh of the persistent cache.

- Manually triggering a persistent cache refresh.

Each is described below.

Changes Occurring Through RadiantOne

If RadiantOne receives an update for an entry that is stored in a persistent cache, the following operations occur:

- The entry in persistent cache is “locked” pending the update to the underlying source(s).

- The underlying source(s) receives the update from RadiantOne.

- Upon successful update of the underlying source(s), RadiantOne updates the entry in the persistent cache.

- The modified entry is available in the persistent cache.

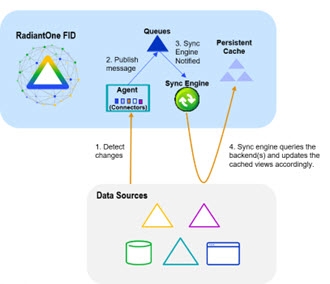
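The update sequence described above can be modeled conceptually as a write-through cache. The sketch below is not RadiantOne's implementation: the `WriteThroughCache` class, its dictionaries, and the per-entry locks are hypothetical stand-ins chosen only to show the ordering (lock, update the source, then update the cache).

```python
import threading


class WriteThroughCache:
    """Conceptual model only: the source is updated first, then the cached entry."""

    def __init__(self, backend: dict):
        self.backend = backend        # stand-in for the underlying source
        self.cache = dict(backend)    # stand-in for the persistent cache image
        self._locks = {}              # one lock per entry DN
        self._guard = threading.Lock()

    def _lock_for(self, dn):
        with self._guard:
            return self._locks.setdefault(dn, threading.Lock())

    def update(self, dn, attrs, backend_write=None):
        write = backend_write or self.backend.__setitem__
        with self._lock_for(dn):      # the cached entry is locked
            write(dn, attrs)          # the source receives the update (raises on failure)
            self.cache[dn] = attrs    # the cache is updated only after the source succeeds
        return self.cache[dn]         # the modified entry is now available


store = WriteThroughCache({"uid=jdoe,o=companydirectory": {"mail": "old@example.com"}})
store.update("uid=jdoe,o=companydirectory", {"mail": "new@example.com"})
```

If the write to the source raises an exception, the cached entry is left unchanged, which mirrors the "upon successful update" condition in the steps.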

Real Time Cache Refresh Based on Changes Occurring Directly on the Backend Source(s)

When a change happens in the underlying source, connectors capture the change and send it to update the persistent cache. The connectors are managed by agents built into RadiantOne and changes flow through a message queue for guaranteed message delivery. The real-time refresh process is outlined below.

Figure 2.5: Persistent Cache Refresh Architecture

Persistent Cache Refresh Agents are started automatically once a persistent cache with real-time refresh is configured. An agent can run on any type of RadiantOne cluster node (follower or leader), and only one agent runs at any given time in a RadiantOne cluster. The agent doesn't consume a lot of memory and is not CPU-intensive, so there is no point in running multiple processes to distribute connectors across multiple nodes. One agent per cluster is enough and keeps things simpler.

This type of refresh is described as “Real-time” in the Main Control Panel > Directory Namespace > Cache settings > Cache Branch > Refresh Settings tab (on the right). This is the recommended approach if a real-time refresh is needed.

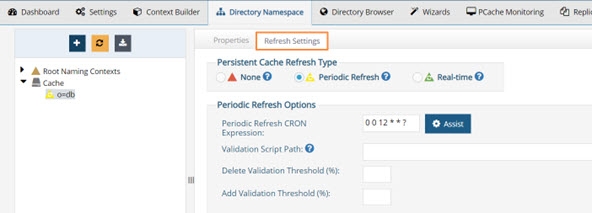

Periodic Refresh

In certain cases, if you know the data in the backends does not change frequently (e.g. once a day), you may not care about refreshing the persistent cache immediately when a change is detected in the underlying data source. In this case, a periodic refresh can be used.

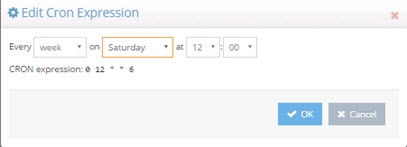

If you have built your view in either the Context Builder tab or Directory Namespace Tab, you can define the refresh interval after you’ve configured the persistent cache. The option to enable periodic refresh is on the Refresh Settings tab (on the right) for the selected persistent cache node. Once the periodic refresh is enabled, configure the interval using a CRON expression. Click the Assist button if you need help defining the CRON expression.

Figure 2.6: Periodic Cache Refresh Settings

During each refresh interval, the periodic persistent cache refresh is performed based on the following high-level steps:

- RadiantOne generates an LDIF formatted file from the virtual view (bypassing the cache).

Warning: If a backend data source is unreachable, RadiantOne attempts to re-connect one more time after waiting 5 seconds. The number of retries is dictated by the maxPeriodicRefreshRetryCount property defined in /radiantone/v1/cluster/config/vds_server.conf in ZooKeeper.

- (Optional) If a validation threshold is defined, RadiantOne determines if the threshold defined has been exceeded. If it has, the persistent cache is not refreshed during this cycle.

- (Optional) If a validation script is defined, RadiantOne invokes the script logic. If the validation script is successful, RadiantOne updates the cache. If the validation script is unsuccessful, RadiantOne does not update the persistent cache during this cycle.

- RadiantOne compares the LDIF file generated in the first step to the current cache image and applies changes to the cache immediately as it goes through the comparison.

The periodic persistent cache refresh activity is logged into <RLI_HOME>/vds_server/logs/periodiccache.log. For details on this log, see the RadiantOne Logging and Troubleshooting Guide.

The rebuild process can be very taxing on your backends, and each time a new image is built you are putting stress on the data sources. This type of cache refresh deployment works well when the data doesn’t change too frequently and the volume of data is relatively small.
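The comparison step of the periodic refresh can be pictured as a diff between two images. The following is a minimal sketch under simplifying assumptions: each image is represented as an in-memory dictionary keyed by DN, and the function name `diff_images` is hypothetical. The source states that RadiantOne applies changes as it goes through the comparison; this sketch collects them first for readability.

```python
def diff_images(current: dict, generated: dict) -> dict:
    """Compare the current cache image with a freshly generated image.

    Both arguments map an entry DN to its attributes.
    """
    adds = {dn: generated[dn] for dn in generated.keys() - current.keys()}
    deletes = sorted(current.keys() - generated.keys())
    modifies = {
        dn: generated[dn]
        for dn in generated.keys() & current.keys()
        if generated[dn] != current[dn]
    }
    return {"add": adds, "delete": deletes, "modify": modifies}


current_image = {
    "uid=a,o=companydirectory": {"mail": ["a@example.com"]},
    "uid=b,o=companydirectory": {"mail": ["b@example.com"]},
}
generated_image = {
    "uid=a,o=companydirectory": {"mail": ["a.new@example.com"]},
    "uid=c,o=companydirectory": {"mail": ["c@example.com"]},
}
changes = diff_images(current_image, generated_image)
```

Here `changes` reports one added entry (uid=c), one deleted entry (uid=b), and one modified entry (uid=a).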

Manually Trigger a Persistent Cache Refresh

You can manually trigger a persistent cache refresh with the method described in this section no matter what kind of refresh strategy has been configured (e.g. none, periodic or real-time).

The manual refresh leverages the same methodology as a periodic refresh. Run the following command, substituting your cached naming context for <pcache naming>.

C:\radiantone\vds\bin>vdsconfig.bat search-vds -dn "action=deltarefreshpcache,<pcache naming>" -filter "(objectclass=*)" -leader

The log containing the refresh actions performed is <RLI_HOME>/vds_server/logs/periodiccache.log.

For more details on the search-vds command, see the RadiantOne Command Line Configuration Guide.

Configuring Persistent Cache with Periodic Refresh

Review the section on periodically refreshing the cache to ensure the persistent cache is updated to match your needs. If you plan on refreshing the cache image periodically on a defined schedule, this would be the appropriate cache configuration option. This type of caching option leverages the internal RadiantOne Universal Directory storage for the cache image.

To configure persistent cache with periodic refresh:

- On the Directory Namespace tab of the Main Control Panel, click the Cache node.

- On the right side, browse to the branch in the RadiantOne namespace that you would like to store in persistent cache and click OK.

- Click Create Persistent Cache. The configuration process begins. Once it completes, click OK to exit the window.

- Click the Refresh Settings tab.

- Select the Periodic Refresh option.

- Enter the CRON expression to define the refresh interval.

- (Optional) Define a Validation Script Path.

- (Optional) Define a Delete Validation Threshold.

- (Optional) Define an Add Validation Threshold.

- Click Save.

- Click Initialize to start the initialization process.

There are two options for initializing the persistent cache: Creating a new LDIF file or initializing from an existing LDIF file. Each is described below.

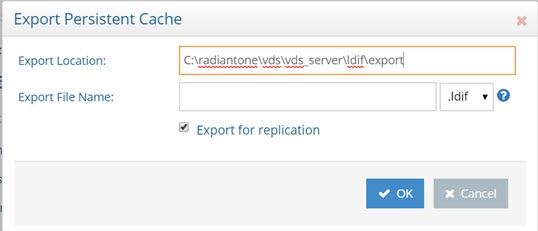

Create an LDIF from a Snapshot

If this is the first time you’ve initialized the persistent cache, then you should choose this option. An LDIF formatted file is generated from the virtual view and then imported into the local RadiantOne Universal Directory store.

Initialize from an Existing LDIF File

If you’ve initialized the persistent cache before and the LDIF file was created successfully from the backend source(s) (and the data from the backend(s) has not changed since the generation of the LDIF file), then you can choose to use that existing file. The persisting of the cache occurs in two phases. The first phase generates an LDIF file with the data returned from the queries to the underlying data source(s). The second phase imports the LDIF file into the local RadiantOne Universal Directory store. If there is a failure during the second phase, and you must re-initialize the persistent cache, you have the option to choose the LDIF file (that was already built during the first phase) instead of having to re-generate it (as long as the LDIF file generated successfully). You can click browse and navigate to the location of the LDIF. The LDIF files generated are in <RLI_HOME>\<instance_name>\ldif\import.

If you have a large data set and generated multiple LDIF files for the purpose of initializing the persistent cache (each containing a subset of what you want to cache), name the files with a suffix of “_2”, “_3”…etc. For example, let’s say the initial LDIF file (containing the first subset of data you want to import) is named cacheinit.ldif. After this file has been imported, the process attempts to find cacheinit_2.ldif, then cacheinit_3.ldif…etc. Make sure all files are located in the same place so the initialization process can find them.
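The file naming convention above can be checked before starting an initialization. This sketch lists the files that would be picked up in order, assuming the sequence stops at the first missing number (the text does not say what happens when a number is skipped). The function name `ldif_sequence` is hypothetical.

```python
from pathlib import Path


def ldif_sequence(first_file):
    """Return the initial LDIF file followed by its _2, _3, ... siblings, in order."""
    first = Path(first_file)
    if not first.exists():
        return []
    files = [first]
    n = 2
    while True:
        # cacheinit.ldif -> cacheinit_2.ldif, cacheinit_3.ldif, ...
        candidate = first.with_name(f"{first.stem}_{n}{first.suffix}")
        if not candidate.exists():
            return files
        files.append(candidate)
        n += 1
```

For example, calling `ldif_sequence` with the path to cacheinit.ldif in a folder that also holds cacheinit_2.ldif and cacheinit_3.ldif returns those three paths in import order.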

After you choose to either generate or re-use an LDIF file, click Finish and cache initialization begins. Cache initialization is launched as a task and can be viewed and managed from the Tasks Tab in the Server Control Panel associated with the RadiantOne leader node. Therefore, you do not need to wait for the initialization to finish before exiting the initialization window.

After the persistent cache is initialized, queries are handled locally by the RadiantOne service and are no longer sent to the backend data source(s). For information about properties associated with persistent cache, please see Persistent Cache Properties.

Periodic Refresh CRON Expression

If periodic refresh is enabled, you must define the refresh interval in this property. For example, if you want the persistent cache refreshed every day at 12:00 PM, the CRON expression is: 0 0 12 1/1 * ? *

Click Assist if you need help defining the CRON expression.

Figure 2.7: CRON Expression Editor
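The seven-field example above (0 0 12 1/1 * ? *) reads naturally as a Quartz-style expression, with seconds first and an optional year last. That field order is an assumption inferred from the example, not something this guide states, and the helper name `label_cron` is hypothetical. The sketch only labels the fields; it does not validate or schedule anything.

```python
QUARTZ_FIELDS = (
    "seconds", "minutes", "hours", "day_of_month", "month", "day_of_week", "year",
)


def label_cron(expression: str) -> dict:
    """Pair each field of a 6- or 7-field expression with its assumed meaning."""
    parts = expression.split()
    if len(parts) not in (6, 7):
        raise ValueError(f"expected 6 or 7 fields, got {len(parts)}")
    return dict(zip(QUARTZ_FIELDS, parts))


fields = label_cron("0 0 12 1/1 * ? *")
print(fields["hours"], fields["minutes"], fields["seconds"])  # 12 0 0
```

Read this way, the example fires at 12:00:00 every day, which matches the "every day at 12:00 PM" description.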

Validation Script Path

For details on how the periodic persistent cache refresh process works, see Periodic Refresh.

You can apply a script to validate the generated LDIF file/image prior to RadiantOne executing the cache refresh process. If the LDIF file (input) doesn’t meet the validation requirements, the persistent cache refresh is aborted for this refresh cycle.

In the Validation Script Path property, you can enter the script name. The type of script should be a shell script (.sh) or batch script (.bat) file. If just a script name is defined (or a relative path and script name), it is assumed the script is in the <RLI_HOME>/vds_server/custom folder. This location is shared across cluster nodes automatically. If an absolute path is defined for this property, this location must be a shared drive/location across all cluster nodes.

The image validation process takes the generated LDIF file name as an input. The script scans the LDIF file, can make changes if needed, and then returns an exit/error code to indicate whether RadiantOne should proceed with the cache refresh. If the exit code is 0, the service continues with the refresh process. If the exit code is not 0, the cache refresh is aborted.
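The exit-code contract above can be illustrated with a small validation routine. The guide requires the configured script to be a shell (.sh) or batch (.bat) file, so the Python below is only a sketch of the logic; calling it from a thin .sh or .bat wrapper is an assumption on our part. The minimum-entry rule, the function names, and the exit codes 1 and 2 are hypothetical choices (the text only distinguishes 0 from non-zero).

```python
import sys


def count_entries(ldif_path):
    """Count entries by counting lines that start a DN ("dn:" or base64 "dn::")."""
    count = 0
    with open(ldif_path, encoding="utf-8", errors="replace") as ldif:
        for line in ldif:
            if line.lower().startswith("dn:"):
                count += 1
    return count


def validate(ldif_path, min_entries=1):
    """Return 0 to let the refresh proceed, non-zero to abort it."""
    try:
        return 0 if count_entries(ldif_path) >= min_entries else 1
    except OSError:
        return 2  # file missing or unreadable: abort the refresh


# Only act as a script when a file name is actually supplied.
if __name__ == "__main__" and len(sys.argv) > 1:
    sys.exit(validate(sys.argv[1]))
```

A wrapper script would pass the generated LDIF file name as the first argument and propagate the exit code unchanged.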

Delete Validation Threshold

For details on how the periodic persistent cache refresh process works, see Periodic Refresh.

You can define a threshold to validate the generated LDIF file/image prior to RadiantOne executing the cache refresh process. The threshold is a percentage of the total entries.

To define a granular threshold for delete operations, indicate the percentage in the Delete Validation Threshold. For example, if Delete Validation Threshold contains a value of 50, it means if the generated LDIF image contains at least 50% fewer entries than the current cache image, the periodic persistent cache refresh is aborted for the current refresh cycle.

If both a validation script and validation threshold are configured, the threshold is checked first. If the threshold does not invalidate the refresh, the validation script is invoked. If neither a threshold nor a script is configured, RadiantOne compares the generated LDIF file to the current cache image and updates the cache based on the differences between the two.

Add Validation Threshold

For details on how the periodic persistent cache refresh process works, see Periodic Refresh.

You can define a threshold to validate the generated LDIF file/image prior to RadiantOne executing the cache refresh process. The threshold is a percentage of the total entries.

To define a granular threshold for add operations, indicate the percentage in the Add Validation Threshold. For example, if Add Validation Threshold contains a value of 50, it means if the generated LDIF image contains 50% more entries than the current cache image, the periodic persistent cache refresh is aborted for the current refresh cycle.

If both a validation script and validation threshold are configured, the threshold is checked first. If the threshold does not invalidate the refresh, the validation script is invoked. If neither a threshold nor a script is configured, RadiantOne compares the generated LDIF file to the current cache image and updates the cache based on the differences between the two.
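The two thresholds can be summarized as a single check on entry counts. This is a minimal sketch, assuming both thresholds are inclusive ("at least" is stated for deletes; treating adds the same way is an assumption) and that an empty current image skips the check. The function name `refresh_allowed` is hypothetical.

```python
def refresh_allowed(current_count, generated_count,
                    delete_threshold=None, add_threshold=None):
    """Return False when the generated image differs too much from the cache.

    Thresholds are percentages of the current entry count; None means not configured.
    """
    if current_count <= 0:
        return True  # assumption: nothing to compare against
    change_pct = (generated_count - current_count) * 100.0 / current_count
    if delete_threshold is not None and -change_pct >= delete_threshold:
        return False  # too many entries disappeared
    if add_threshold is not None and change_pct >= add_threshold:
        return False  # too many entries appeared
    return True


# Delete Validation Threshold of 50: an image with half the entries is rejected.
print(refresh_allowed(1000, 500, delete_threshold=50))  # False
# Add Validation Threshold of 50: an image with 40% more entries is accepted.
print(refresh_allowed(1000, 1400, add_threshold=50))    # True
```

A check like this guards against refreshing the cache from an image built while a backend was partially unavailable.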

Configuring Persistent Cache with Real-Time Refresh

If you plan on automatically refreshing the persistent cache as changes happen on the backend data sources, this would be the recommended cache configuration option. This type of caching option leverages the RadiantOne Universal Directory storage for the cache image.

If you choose a real-time refresh strategy, there are two terms you need to become familiar with:

-

Cache Dependency – cache dependencies are all objects/views related to the view that is configured for persistent cache. A cache dependency is used by the cache refresh process to understand all the different objects/views that need to be updated based on changes to the backend sources.

-

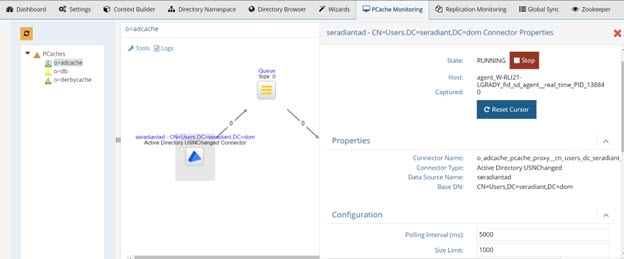

Cache Refresh Topology – a cache refresh topology is a graphical representation of the flow of data needed to refresh the cache. The topology includes an object/icon that represents the source (the backend object where changes are detected from), the queue (the temporary storage of the message), and the cache destination. Cache refresh topologies can be seen from the Main Control Panel > PCache Monitoring tab.

Cache dependencies and the refresh topology are generated automatically during the cache configuration process.

If you have deployed multiple nodes in a cluster, to configure and initialize the persistent cache, you must be on the current RadiantOne leader node. To find out the leader status of the nodes, go to the Dashboard tab > Overview section in the Main Control Panel and locate the node with a yellow triangle icon.

To configure persistent cache with real-time refresh:

-

Go to the Directory Namespace Tab of the Main Control Panel associated with the current RadiantOne leader node.

-

Click the Cache node.

-

On the right side, browse to the branch in the RadiantOne namespace that you would like to store in persistent cache and click OK.

WarningFor proxy views of LDAP backends, you must select the root level to start the cache from. Caching only a sub-container of a proxy view is not supported.

-

Click Create Persistent Cache. The configuration process begins. Once it completes, click OK to exit the window.

-

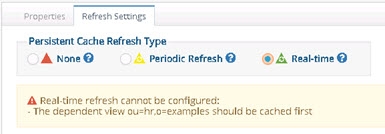

On the Refresh Settings tab, select the Real-time refresh option.

WarningIf your virtual view is joined with other virtual views you must cache the secondary views first. Otherwise, you are unable to configure the real-time refresh and will see the following message. A Diagnostic button is also shown and provides more details about which virtual views require caching.

Figure 2.8: Caching secondary views message

-

Configure any needed connectors. Please see the section titled Configuring Source Connectors for steps.

-

Click Save.

-

On the Refresh Settings tab, click Initialize to initialize the persistent cache.

There are two options for initializing a persistent cache. Each is described below.

Create an LDIF File

If this is the first time you’ve initialized the persistent cache, choose this option. An LDIF formatted file is generated from the virtual view and then imported into the cache.

Using an Existing LDIF

If you’ve initialized the persistent cache before and the LDIF file was created successfully from the backend source(s) (and the data from the backend(s) has not changed since the generation of the LDIF file), then you can choose this option to use that existing file. The persisting of the cache occurs in two phases. The first phase generates an LDIF file with the data returned from the queries to the underlying data source(s). The second phase imports the LDIF file into the local RadiantOne Universal Directory store. If there is a failure during the second phase, and you must re-initialize the persistent cache, you have the option to choose the LDIF file (that was already built during the first phase) instead of having to re-generate it (as long as the LDIF file generated successfully). You can click browse and navigate to the location of the LDIF. The LDIF files generated are in <RLI_HOME>\<instance_name>\ldif\import.

If you have a large data set and generated multiple LDIF files for the purpose of initializing the persistent cache (each containing a subset of what you want to cache), name the files with a suffix of “_2”, “_3”…etc. For example, let’s say the initial LDIF file (containing the first subset of data you want to import) is named cacheinit.ldif. After this file has been imported, the process attempts to find cacheinit_2.ldif, then cacheinit_3.ldif…etc. Make sure all files are located in the same place so the initialization process can find them.

- Click OK. The cache initialization process begins. The cache initialization is performed as a task and can be viewed and managed from the Tasks Tab in the Server Control Panel associated with the RadiantOne leader node. Therefore, you do not need to wait for the initialization to finish before exiting the initialization window.

- The view(s) is now in the persistent cache. Queries are handled locally by RadiantOne and are no longer sent to the backend data source(s). Real-time cache refresh has been configured. For information about properties associated with persistent cache, please see Persistent Cache Properties.

Configuring Source Connectors Overview

Configuring connectors involves deciding how you want to detect changes from your backend(s). By default, all directory connectors and custom connectors (only custom connectors included in the RadiantOne install) are configured and started immediately without further configuration. For databases, configure the connector to use the desired change detection mechanism.

All connectors leverage the connection pooling settings defined from the Main Control Panel > Settings tab. In other words, the connector opens a connection to the data source to pick up changes and keeps the connection open so when the next interval passes a new connection does not need to be created.

Configuring Source Database Connectors

For database backends (JDBC-accessible), the change detection options are:

- Changelog – This connector type relies on a database table that contains all changes that have occurred on the base tables (that the RadiantOne virtual view is built from). This typically involves having triggers on the base tables that write into the log/changelog table. However, an external process may be used instead of triggers. The connector picks up changes from the changelog table based on a specified interval, which is 10 seconds by default. If you need assistance with configuring triggers on the base tables and defining the changelog table, see Script to Generate Triggers.

- Timestamp – This connector type has been validated against Oracle, SQL Server, MySQL, MariaDB, PostgreSQL, and Apache Derby. The database table must have a primary key defined for it and an indexed column that contains a timestamp/date value. This value must be maintained and modified accordingly for each record that is updated.

For Oracle databases, the timestamp column type must be one of the following: "TIMESTAMP", "DATE", "TIMESTAMP WITH TIME ZONE", "TIMESTAMP WITH LOCAL TIME ZONE".

For SQL Server databases, the timestamp column type must be one of the following: "SMALLDATETIME", "DATETIME", "DATETIME2".

For MySQL or MariaDB databases, the timestamp column type must be one of the following: "TIMESTAMP", "DATETIME".

For PostgreSQL databases, the timestamp column type must be one of the following: "TIMESTAMP", "timestamp without time zone" (equivalent to timestamp), "TIMESTAMPTZ", "timestamp with time zone" (equivalent to timestamptz).

For Derby databases, the timestamp column type must be: "TIMESTAMP".

For DB2 databases, the timestamp column type must be: "TIMESTAMP".

The DB Timestamp connector leverages the timestamp column to determine which records have changed since the last polling interval. This connector type does not detect delete operations. If you need to detect and propagate delete operations from the database, choose a different connector type such as DB Changelog or DB Counter.

- Counter – This connector type is supported for any database table that has an indexed column that contains a sequence-based value that is automatically maintained and modified for each record that is added/updated. This column must be one of the following types: BIGINT, DECIMAL, INTEGER, or NUMERIC. If DECIMAL or NUMERIC is used, it should be declared without digits after the decimal point: DECIMAL(6,0), not DECIMAL(6,2). The DB Counter connector leverages this column to determine which records have changed since the last polling interval. This connector type can detect delete operations as long as the table has a dedicated “Change Type” column that indicates one of the following values: insert, update, delete. If the value is empty or something other than insert, update, or delete, an update operation is assumed.

If none of these options are usable with your database, use a periodic cache refresh instead of real-time.
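The Counter connector's behavior can be sketched as "select rows whose counter is above the last one seen, then interpret the change type". The code below is a conceptual model only, working on in-memory tuples rather than a database. The function names, the tuple layout, and the case-insensitive matching of the change type are assumptions; the fallback to update for empty or unknown values comes from the text.

```python
VALID_CHANGE_TYPES = {"insert", "update", "delete"}


def normalize_change_type(value):
    """Empty or unrecognized change types are treated as updates."""
    text = (value or "").strip().lower()
    return text if text in VALID_CHANGE_TYPES else "update"


def changes_since(rows, last_counter):
    """rows: iterable of (counter, change_type, key) tuples.

    Returns the pending (key, change_type) events in counter order and the new cursor.
    """
    pending = sorted((r for r in rows if r[0] > last_counter), key=lambda r: r[0])
    events = [(key, normalize_change_type(change_type)) for _, change_type, key in pending]
    new_cursor = pending[-1][0] if pending else last_counter
    return events, new_cursor


table = [(1, "insert", "a"), (2, None, "b"), (3, "DELETE", "c"), (4, "merge", "d")]
events, cursor = changes_since(table, last_counter=1)
```

With the sample rows, the second poll picks up records b, c, and d, and the unknown value "merge" is handled as an update.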

DB Changelog

RadiantOne can generate the SQL scripts which create the configuration needed to support the DB Changelog Connector. The scripts can be generated in the Main Control Panel or from command line. Both options store the scripts under <RLI_HOME>/work/sql. The following scripts are generated.

- create_user.sql - Reminds you to have your DBA manually create a user account to be associated with the connector.

- create_capture.sql - Creates the log table and the triggers on the base table.

- drop_capture.sql - Drops the triggers and the log table. Note: for some databases the file is empty.

- drop_user.sql - Drops the log table user and schema. Note: for some databases the file is empty.

Connector Configuration:

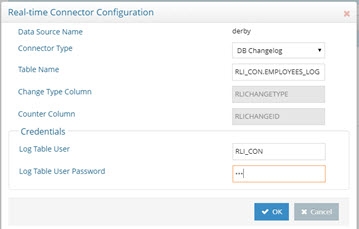

This section describes generating and executing the scripts in the Main Control Panel. The following steps assume the database backend has a changelog table that contains changed records that need to be updated in the persistent cache. The changelog table must have two key columns named RLICHANGETYPE and RLICHANGEID. RLICHANGETYPE must indicate insert, update or delete, dictating what type of change was made to the record. RLICHANGEID must be a sequence-based, auto-incremented INTEGER that contains a unique value for each record. The DB Changelog connector uses RLICHANGEID to maintain a cursor to keep track of processed changes.

If you need assistance with configuring triggers on the base tables and defining the changelog table, see Script to Generate Triggers and Changelog Table.
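The cursor mechanism described above can be tried out against an in-memory SQLite database. This is a sketch only: SQLite stands in for your real database, the table name `test_log` and the `EMPLOYEE_ID` column are hypothetical, and the `poll_changelog` function is not part of RadiantOne. Only the RLICHANGEID and RLICHANGETYPE column names and their roles come from the text.

```python
import sqlite3


def poll_changelog(conn, log_table, cursor_id):
    """Return changes with RLICHANGEID above the cursor, plus the advanced cursor.

    log_table is interpolated into the SQL, so it must come from trusted configuration.
    """
    rows = conn.execute(
        f"SELECT RLICHANGEID, RLICHANGETYPE FROM {log_table} "
        "WHERE RLICHANGEID > ? ORDER BY RLICHANGEID",
        (cursor_id,),
    ).fetchall()
    new_cursor = rows[-1][0] if rows else cursor_id
    return rows, new_cursor


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE test_log ("
    "RLICHANGEID INTEGER PRIMARY KEY AUTOINCREMENT, "  # sequence-based, unique per change
    "RLICHANGETYPE TEXT NOT NULL, "                    # insert, update or delete
    "EMPLOYEE_ID TEXT)"                                # hypothetical base-table key
)
conn.executemany(
    "INSERT INTO test_log (RLICHANGETYPE, EMPLOYEE_ID) VALUES (?, ?)",
    [("insert", "1"), ("update", "1"), ("delete", "2")],
)
changes, cursor_id = poll_changelog(conn, "test_log", 0)
```

Polling again with the returned cursor yields no rows until new changes are written to the log table, which is how a cursor avoids reprocessing changes.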

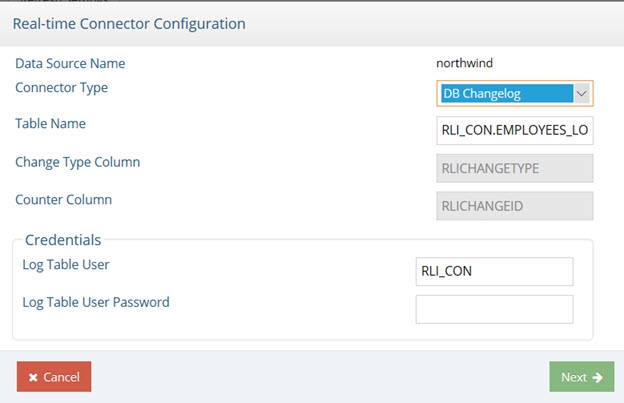

To configure DB Changelog connector:

These instructions assume you want to apply the SQL scripts immediately and you already have a user account in the database to use for the connector.

- From the Main Control Panel > Directory Namespace Tab, select the configured persistent cache branch below the Cache node.

- On the right side, select the Refresh Settings tab.

- When the Real-time refresh type is selected, the connectors appear in a table below. Select a connector and click Configure.

- Select DB Changelog from the Connector Type drop-down list.

- Enter the log table name using the proper syntax for your database (e.g.

>[!warning] Change the value for this property only if you are creating the log table manually and the capture connector does not calculate the log table name correctly. Be sure to use the [correct syntax](#log-table-name-syntax) if you change the value.

- Indicate the user name and password for the connector’s dedicated credentials for connecting to the log table. If you do not have the user name and password, contact your DBA for the credentials. An example is shown below.

Figure 2.9: DB Changelog Connector Configuration

- Click Next.

- When the connector has been configured, click Next again.

- Select Apply Now. Click Next.

Note: Selecting Apply Now creates and executes the SQL scripts. If you choose to apply later, the scripts are created but not executed.

- Click Next and then click Finish.

-

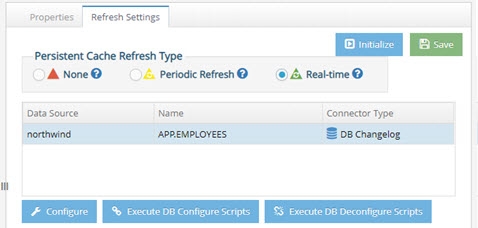

After all connectors are configured, click Save.

NoteThe Execute DB Configure Scripts and Execute DB Deconfigure Scripts buttons become available when you finish configuring the connector. Execute DB Configure Scripts runs create_capture.sql. Execute DB Deconfigure Scripts runs drop_capture.sql. The location that RadiantOne looks for these scripts in cannot be changed.

Figure 2.10: The Execute DB Configure and Deconfigure buttons

-

Go to Main Control Panel > PCache Monitoring tab to start connectors, configure connector properties and manage and monitor the persistent cache refresh process.

If you make changes to the DB Changelog Connector configuration, restart the connector on the PCache Monitoring tab. Select the icon representing the database backend and click Stop. Then click Start to restart it.

Log Table Name Syntax

Proper syntax for the Log Table Name must include both the schema name and the table name separated with a period. Values for this property may contain quote marks as required by the database. In most cases, the double quote mark (") is used, but some databases use a single quote (') or back quote (`). The following examples explain the property’s syntax and usage.

Example 1:

For Postgres, if the schema is rli_con, and log table name is test_log, the property should be one of the following.

Note: By default, Postgres uses lower-case table names.

rli_con.test_log

or with optional quoting:

"rli_con"."test_log"

Example 2:

For SQL Server, if the schema is RLI_CON, and log table name is TEST_LOG, the property should be one of the following.

By default, many databases, including SQL Server, use upper-case table names.

RLI_CON.TEST_LOG

Or with optional quoting:

"RLI_CON"."TEST_LOG"

If this name is the same as the log name in the database, leave the property empty.
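The convention used in this section (schema and table joined by a period, quoting optional for single-case names and required for mixed-case names) can be sketched as a small helper. The function name and its quote parameter are hypothetical and are not part of RadiantOne; the sketch assumes the same quote character opens and closes an identifier.

```python
def format_log_table_name(schema: str, table: str, quote: str = '"') -> str:
    """Build a SCHEMA.TABLE value, quoting a part only when it is mixed-case."""

    def is_mixed_case(name: str) -> bool:
        # Mixed-case means the name is neither all lower-case nor all upper-case.
        return name != name.lower() and name != name.upper()

    def fmt(name: str) -> str:
        return f"{quote}{name}{quote}" if is_mixed_case(name) else name

    return f"{fmt(schema)}.{fmt(table)}"


postgres_name = format_log_table_name("rli_con", "test_log")
sql_server_name = format_log_table_name("RLI_CON", "TEST_LOG")
mixed_case_name = format_log_table_name("Rli_Con", "Test_Log")
```

The helper leaves single-case names unquoted, which is the simpler of the two accepted forms for those names.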

Example 3:

If the schema and/or table name contain mixed-case characters, they must be quoted. For example, if the schema is Rli_Con, and log table name is Test_Log, the property should be as follows.

"Rli_Con"."Test_Log"

Create Scripts to Generate Triggers and Changelog Table

If the database backend doesn’t have a changelog table, you can use RadiantOne to create one. RadiantOne can generate SQL scripts that a DBA can run on the database backend. These scripts create the needed configuration to support the DB Changelog connector. Use <RLI_HOME>/bin/advanced/create_db_triggers.bat to generate the scripts. The command uses seven arguments (which are described below) and generates the SQL script needed to configure the database to support the DB Changelog connector.

These scripts can be provided to the database backend DBA to review, modify and execute on the database server. Scripts generated using this command cannot be executed in the Main Control Panel.

Example:

create_db_triggers.bat -d sql123 -n sql_server_data_source -t DBO.EMPLOYEES -u rli_con -p rli_con -l EMPLOYEES_LOG

Based on this example, the command generates scripts at the following location: <RLI_HOME>/work/sql/sql123/

The RadiantOne data source name is sql_server_data_source (it must exist prior to running the command).

The base table name is EMPLOYEES in the DBO schema.

The log table user to be created is: rli_con with a password of: rli_con

The log table name is EMPLOYEES_LOG.

The table below outlines the available arguments.

| Argument | Description |
|---|---|
| -d | The name of the folder where the scripts are saved (e.g. sql1). The name should not contain any special or path characters (e.g. |
| -n | The RadiantOne data source name. The data source contains information (JDBC connection string, user, and password) that is used to connect to the database and read the base table schema. The credentials defined in the data source must have permission to read the base table schema. Note - the data source must exist prior to using the command. |
| -t | The base table name. The name of the table is used to create the create_capture.sql and the drop_capture.sql scripts. The base table name should be in the form: SCHEMA.TABLE_NAME, for example DBO.CUSTOMERS. SCHEMA and TABLE_NAME should not contain special characters (e.g. [ ]`".), should not be quoted, and should be in the proper upper/lower case (depends on the database type/vendor). |
| -u | The log table user. The create_user.sql script includes commands to create a log table user/owner and the log table schema (which will have the same name as the log table user). The log table user is created with the password assigned by the -p option. |
| -p | The password for the log table user. |
| -l | Specify the log table name instead of using default computation based on full base table name. |
| -s | Specify the log table schema name. |
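To keep the arguments straight when scripting this step, the command line can be assembled programmatically. The sketch below only builds the argument list and does not run anything. The function name is hypothetical, and treating -l and -s as optional is an assumption based on their descriptions above.

```python
FORBIDDEN_CHARS = set('[]`"')  # special characters the -t description warns against


def build_create_db_triggers_args(folder, data_source, base_table,
                                  log_user, log_password,
                                  log_table=None, log_schema=None):
    """Return the create_db_triggers.bat command as a list of arguments."""
    parts = base_table.split(".")
    if len(parts) != 2 or not all(parts) or FORBIDDEN_CHARS & set(base_table):
        raise ValueError("base table must be unquoted SCHEMA.TABLE_NAME")

    args = ["create_db_triggers.bat",
            "-d", folder,
            "-n", data_source,
            "-t", base_table,
            "-u", log_user,
            "-p", log_password]
    if log_table:       # assumed optional: override the computed log table name
        args += ["-l", log_table]
    if log_schema:      # assumed optional: override the log table schema name
        args += ["-s", log_schema]
    return args


example_args = build_create_db_triggers_args(
    "sql123", "sql_server_data_source", "DBO.EMPLOYEES",
    "rli_con", "rli_con", log_table="EMPLOYEES_LOG")
command_line = " ".join(example_args)
```

With these inputs, command_line reproduces the example command shown earlier in this section.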

DB Timestamp

The following steps assume your backend database table has a primary key defined and contains a timestamp column. The timestamp column name is required for configuring the connector. The timestamp column database types supported are described in the Database Connectors section.

Note: This connector type does not detect delete operations. If you need to detect delete operations from the database, choose a different connector type.

-

From the Main Control Panel > Directory Namespace Tab, select the configured persistent cache branch below the Cache node.

-

On the right side, select the Refresh Settings tab.

-

When the Real-time refresh type is selected, the connectors appear in a table below. Select a connector and click Configure.

-

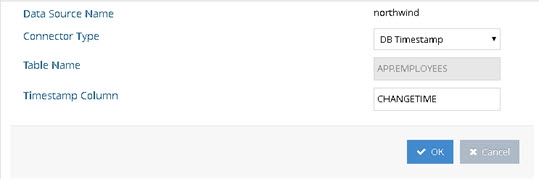

Select DB Timestamp from the Connector Type drop-down list.

-

Indicate the column name in the database table that contains the timestamp. An example is shown below.

Figure 2.11: DB Timestamp Connector Configuration

-

Click OK.

-

After all connectors are configured, click Save.

-

The connectors are started automatically once they are configured.

-

Go to Main Control Panel > PCache Monitoring tab to configure connector properties and manage and monitor the persistent cache refresh process.

If you need to make changes to the timestamp column name, manually restart the connector and reset the cursor. This can be done from the PCache Monitoring tab. Select the icon representing the database backend and click Stop. Then click Start to restart it. Then click Reset Cursor.
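The reason timestamp-based detection cannot see deletes can be shown with a minimal sketch. It uses an in-memory SQLite table and integer timestamps for readability. The customers table, the last_modified column, and the poll_by_timestamp helper are hypothetical and do not reflect how the connector is implemented.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, last_modified INTEGER)"
)


def poll_by_timestamp(conn, cursor_position):
    """Return rows modified after cursor_position and the advanced cursor."""
    rows = conn.execute(
        "SELECT id, name, last_modified FROM customers "
        "WHERE last_modified > ? ORDER BY last_modified",
        (cursor_position,),
    ).fetchall()
    new_position = rows[-1][2] if rows else cursor_position
    return rows, new_position


conn.execute("INSERT INTO customers VALUES (1, 'Ann', 100)")
conn.execute("INSERT INTO customers VALUES (2, 'Bob', 101)")
seen, position = poll_by_timestamp(conn, 0)

# An update moves the timestamp forward, so the next poll picks it up.
conn.execute("UPDATE customers SET name = 'Bobby', last_modified = 102 WHERE id = 2")
# A delete removes the row, so no timestamp is left for the poll to find.
conn.execute("DELETE FROM customers WHERE id = 1")
later, position = poll_by_timestamp(conn, position)
```

The second poll returns only the updated row. The deleted row simply disappears from the table, which is why a different connector type is needed when deletes must be propagated to the cache.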

DB Counter

The following steps assume your database backend table contains an indexed column that contains a sequence-based value that is automatically maintained and modified for each record that is added, updated or deleted. The DB Counter connector uses this column to maintain a cursor to keep track of processed changes. The counter column database types supported are described in the Database Connectors section.

-

From the Main Control Panel > Directory Namespace Tab, select the configured persistent cache branch below the Cache node.

-

On the right side, select the Refresh Settings tab.

-

When the Real-time refresh type is selected, the connectors appear in a table below. Select a connector and click Configure.

-

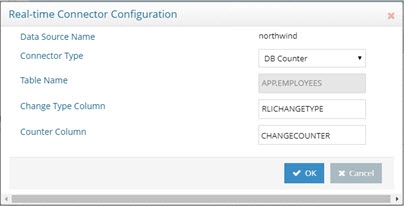

Select DB Counter from the Connector Type drop-down list.

-

Enter a value in the Change Type Column field. This value should be the database table column that contains the information about the type of change (insert, update or delete). If the column doesn’t have a value, an update operation is assumed.

-

Enter the column name in the database table that contains the counter. An example is shown below.

Figure 2.12: DB Counter Connector Configuration

-

Click OK.

-

After all connectors are configured, click Save.

-

The connectors are started automatically once they are configured.

-

Go to Main Control Panel > PCache Monitoring tab to configure connector properties and manage and monitor the persistent cache refresh process.

If you need to make changes to the Counter Column name, manually restart the connector and reset the cursor. This can be done from the PCache Monitoring tab. Select the icon representing the database backend and click Stop. Then click Start to restart it. Then click Reset Cursor.
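The interaction between the counter column and the change type column, including the fallback to an update when the change type is empty, can be sketched as follows. The accounts table, its column names, and the poll_by_counter helper are hypothetical. An in-memory SQLite database stands in for the backend.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts (id INTEGER, name TEXT, change_type TEXT, change_counter INTEGER)"
)
conn.execute("CREATE INDEX idx_change_counter ON accounts (change_counter)")
conn.executemany(
    "INSERT INTO accounts VALUES (?, ?, ?, ?)",
    [
        (1, "Ann", "insert", 1),
        (2, "Bob", None, 2),      # no change type recorded
        (3, "Cy", "delete", 3),
    ],
)


def poll_by_counter(conn, cursor_position):
    """Return (id, change type) pairs past cursor_position and the new cursor."""
    rows = conn.execute(
        "SELECT id, change_type, change_counter FROM accounts "
        "WHERE change_counter > ? ORDER BY change_counter",
        (cursor_position,),
    ).fetchall()
    # An empty or missing change type is treated as an update.
    changes = [(row_id, change_type or "update") for row_id, change_type, _ in rows]
    new_position = rows[-1][2] if rows else cursor_position
    return changes, new_position


changes, position = poll_by_counter(conn, 0)
```

If the counter column name changes, a stored cursor value no longer refers to the same sequence, which is one way to understand why the cursor must be reset after such a change.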

DB Kafka

The Apache Kafka Consumer API allows applications to subscribe to one or more topics and process the stream of records produced from them. Persistent cached virtual views from Oracle databases that use the GoldenGate Kafka Handler can leverage the RadiantOne Kafka capture connector to detect changes for real-time refresh.

Oracle GoldenGate messages are the only format currently supported with the Kafka capture connector for persistent cache refresh.

After a persistent cache is configured for the virtual view from the Oracle database, on the Refresh Settings tab, select the Real-time refresh option.

-

Select the object representing the Oracle table/view and click Configure.

-

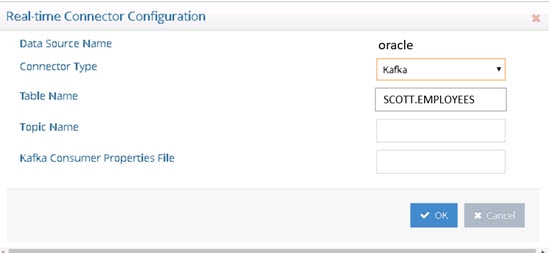

Select Kafka as the Connector Type from the drop-down list.

-

Enter the Kafka Topic Name (e.g. TopicEmpTable).

-

Enter the full path to the Kafka Consumer Properties File (e.g. C:\Downloads\kafka\consumer.properties).

-

Click OK.

-

Click Save.

Figure 2.13: Kafka Connector for Persistent Cache Refresh

Database Connector Failover

This section describes the failover mechanism for the database connectors.

The backend servers must be configured for multi-master replication. Please check the vendor documentation for assistance with configuring replication for your backends.

The database connectors leverage the failover server that has been configured for the data source. When you configure a data source for your backend database, select a failover database server from the drop-down list. The failover server must be configured as a RadiantOne data source. See the screenshot below for how to indicate a failover server for a data source in the Main Control Panel.

Figure 2.14: Configuring Failover Servers for the Backend Database

If a connection cannot be made to the primary server, the connector tries to connect to the failover server configured in the data source. If a connection to both the primary and failover servers fails, the retry count goes up. The connector repeats this process until the value configured in Max Retries on Connection Error is reached. There is no automatic failback, meaning once the primary server is back online, the connector doesn’t automatically go back to it.
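The retry behavior described above can be summarized in a short sketch. This is an illustration of the described sequence, not the connector's actual code: the function name, the callables passed in, and the use of ConnectionError are all assumptions. The max_retries parameter stands in for the Max Retries on Connection Error setting.

```python
def connect_with_failover(connect_primary, connect_failover, max_retries):
    """Try primary, then failover; count a retry only when both fail."""
    retries = 0
    while True:
        for name, connect in (("primary", connect_primary),
                              ("failover", connect_failover)):
            try:
                return name, connect(), retries
            except ConnectionError:
                continue  # this server is unreachable, try the next one
        retries += 1
        if retries >= max_retries:
            raise ConnectionError(f"gave up after {retries} retries")


def server_down():
    raise ConnectionError("server unreachable")


def server_up():
    return "connection"


used_server, connection, retry_count = connect_with_failover(server_down, server_up, 3)
```

The sketch returns the name of the server it connected to so the caller can see that the failover server is in use. It does not model what happens after a connection is established, so it never switches back to the primary on its own.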

Re-configuring Database Connectors

By re-configuring the connector, you can change the connector type.

The connector can be re-configured from the Main Control Panel > Directory Namespace Tab. Navigate below the Cache node and select the persistent cache branch configured for auto-refresh. On the right side, select the Refresh Settings tab. Select the connector you want to re-configure and choose Configure.

To change the connector user password for a connector currently using DB Changelog, enter the user name and password in the Credentials section.

Figure 2.15: Editing DB Changelog Connector Configuration

To change the detection mechanism from DB Changelog to another method, select the type from the Connector Type drop-down menu. Enter values as needed for the properties specific to the new connector type and click Next. Click Next in the confirmation window to confirm that you want the connector reconfigured. Click Next to confirm that the connector has been reconfigured. Click Finish.

Directory Connectors Overview

For directory backends (LDAP-accessible including RadiantOne Universal Directory and Active Directory), the default connectors are configured and started automatically. Go to Main Control Panel > PCache Monitoring tab to configure connector properties and manage and monitor the persistent cache refresh process.

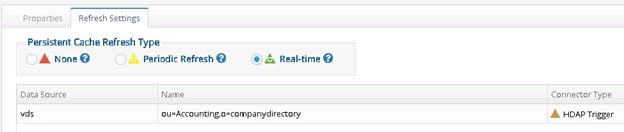

If you are using a persistent cache on a proxy view of a local RadiantOne Universal Directory store, or a nested persistent cache view (a cached view used in another cached view), the connector type is noted as HDAP Trigger. This is a special trigger mechanism that publishes the changes directly into the queue to automatically invoke the refresh to all associated persistent cache layers. This change detection mechanism doesn’t require a connector process (or agents). If a RadiantOne service is virtualizing an external (non-local) RadiantOne Universal Directory store, and a persistent cache is configured for the view, this is considered an “LDAP backend” and the refresh connector can be configured for either changelog or persistent search (whatever is enabled/supported on the remote RadiantOne server) as described below.

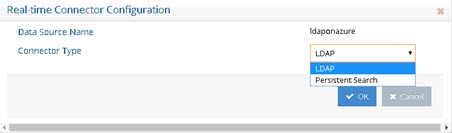

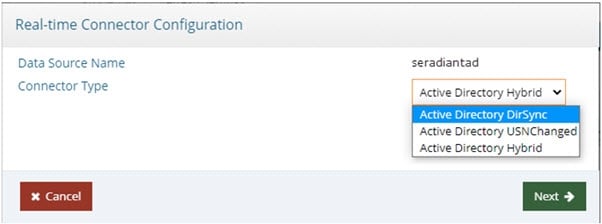

LDAP Directories